8-Bit Quantization and TensorFlow Lite: Speeding up mobile inference with low precision | by Manas Sahni | Heartbeat

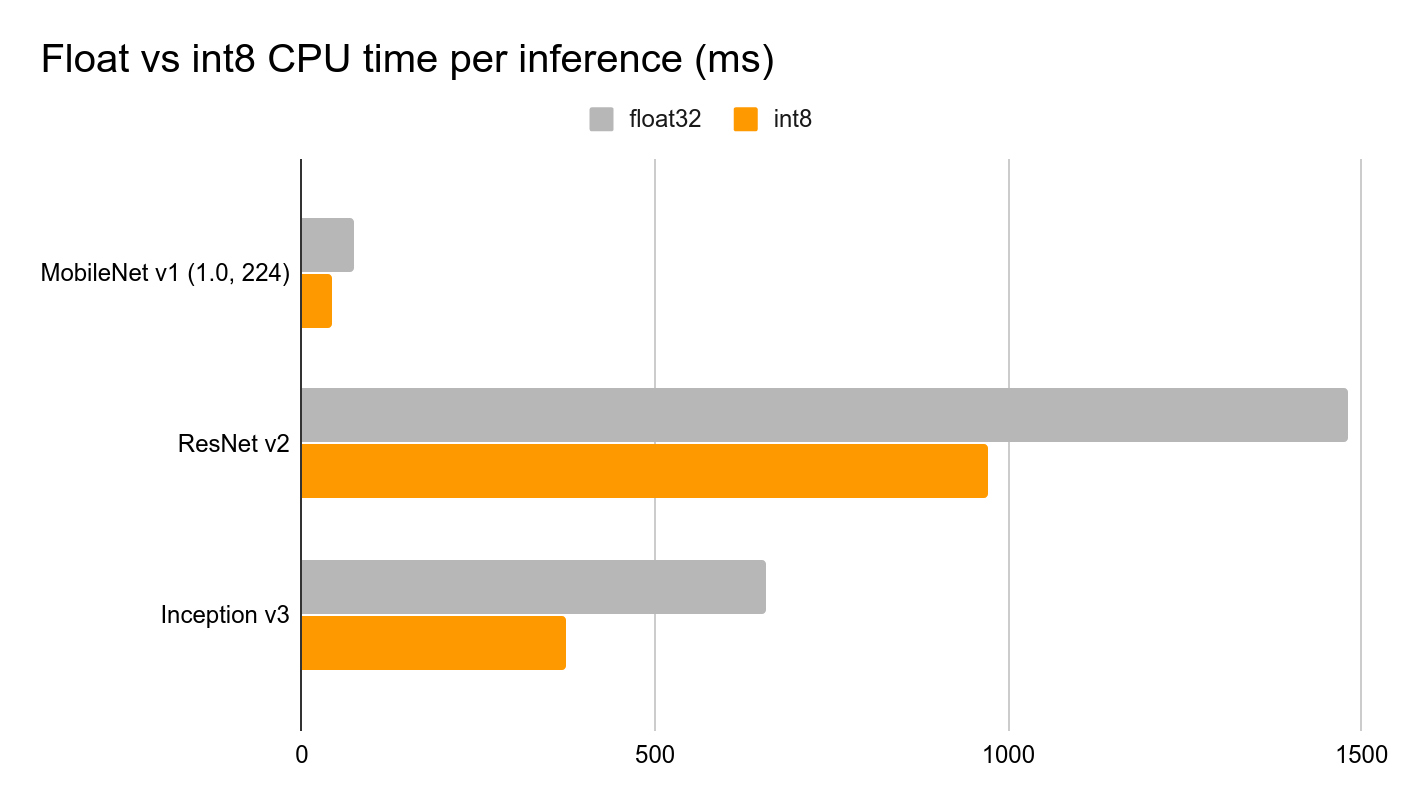

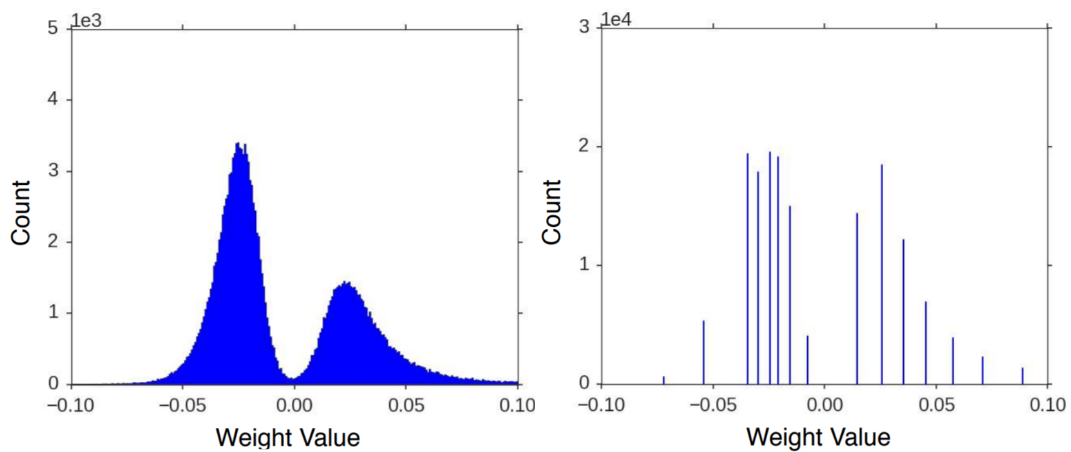

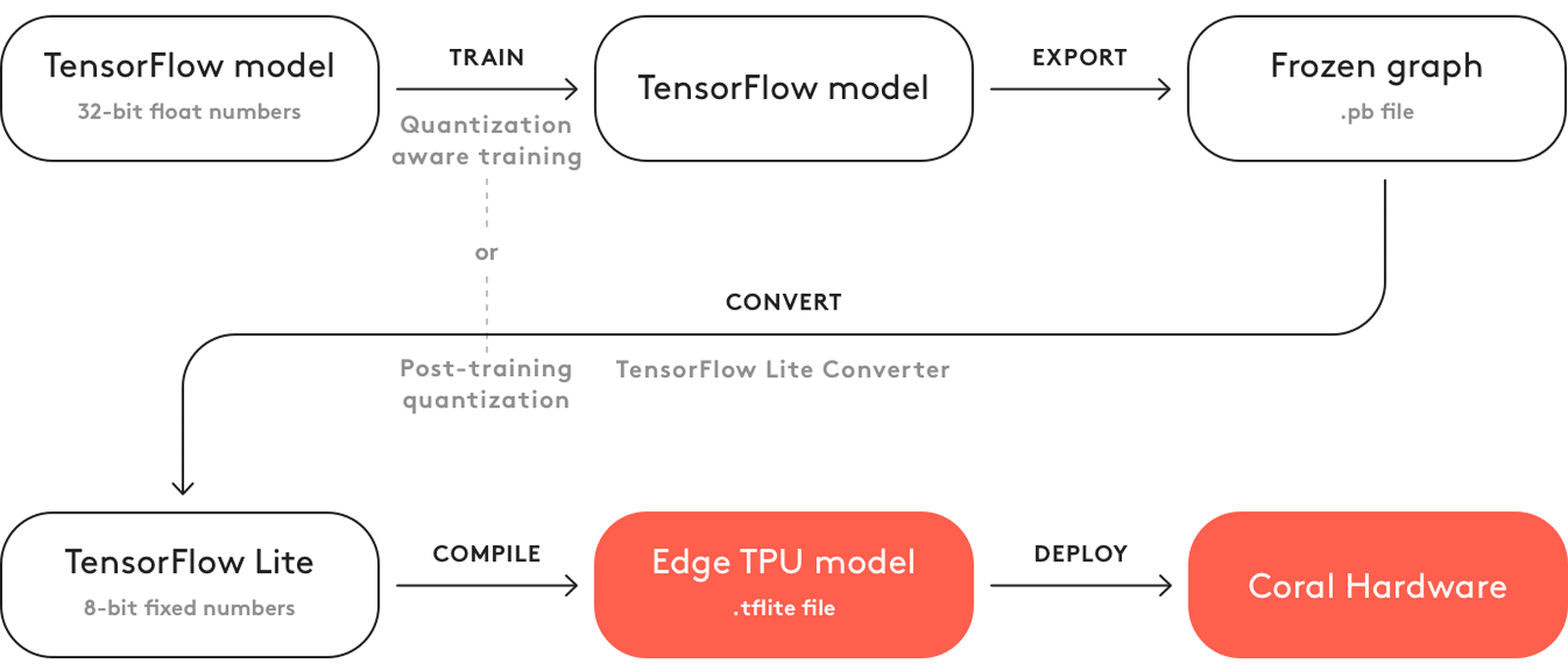

Quantization (post-training quantization) your (custom mobilenet_v2) models .h5 or .pb models using TensorFlow Lite 2.4 | by Alex G. | Analytics Vidhya | Medium

Hoi Lam 🇺🇦🇬🇧🇪🇺 on Twitter: "🚀New #TensorFlow Lite Android Support Library! Get more done with less boilerplate code for pre/post processing, quantization and label mapping: https://t.co/XyYJpZ9F4O Where are we going? 🎙️31 Oct

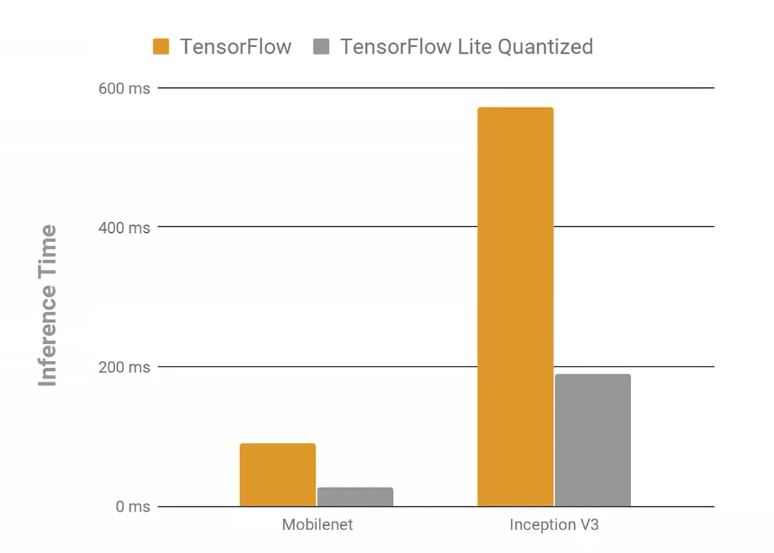

How TensorFlow Lite Optimizes Neural Networks for Mobile Machine Learning | by Airen Surzyn | Heartbeat

Quantized Conv2D op gives different result in TensorFlow and TFLite · Issue #38845 · tensorflow/tensorflow · GitHub